Data Engineering Fundamentals

The rise of ML in recent years is tightly coupled with the rise of big data. Large data systems, even without ML, are complex. If you haven’t spent years working with them, it’s easy to get lost in acronyms. These systems raise many challenges, and each challenge has several possible solutions. Industry standards, if there are any, evolve quickly as new tools come out and the needs of the industry expand.

Data Sources

An ML system can work with data from many different sources. They have different characteristics, can be used for different purposes, and require different processing methods. Understanding the sources your data comes from can help you use your data more efficiently.

One source is user input data, data explicitly input by users. User input can be text, images, videos, uploaded files, etc. If it’s even remotely possible for users to input wrong data, they are going to do it. User input data requires more heavy-duty checking and processing. On top of that, users also have little patience. In most cases, when we input data, we expect to get results back immediately. Therefore, user input data tends to require fast processing.

Another source is system-generated data. This is the data generated by different components of your systems, which include various types of logs and system outputs such as model predictions. Because logs are system generated, they are much less likely to be malformatted the way user input data is. Overall, logs don’t need to be processed as soon as they arrive, the way you would want to process user input data.

Because debugging ML systems is hard, it’s a common practice to log everything you can. This means that your volume of logs can grow very, very quickly. This leads to two problems. The first is that it can be hard to know where to look because signals are lost in the noise. The second problem is how to store a rapidly growing number of logs. Luckily, in most cases, you only have to store logs for as long as they are useful and can discard them when they are no longer relevant for you to debug your current system.

There are also internal databases, generated by various services and enterprise applications in a company. These databases manage the company’s assets, such as inventory, customer relationships, users, and more. This kind of data can be used by ML models directly or by various components of an ML system.

Then there’s the wonderfully weird world of third-party data. First-party data is the data that your company already collects about your users or customers. Second-party data is the data collected by another company on their own customers that they make available to you, though you’ll probably have to pay for it. Third-party data companies collect data on the public who aren’t their direct customers.

Data Formats

Once you have data, you might want to store it (or “persist” it, in technical terms). Since your data comes from multiple sources with different access patterns, storing your data isn’t always straightforward and, for some cases, can be costly. It’s important to think about how the data will be used in the future so that the format you use will make sense.

The process of converting a data structure or object state into a format that can be stored or transmitted and reconstructed later is data serialization. There are many, many data serialization formats. When considering a format to work with, you might want to consider different characteristics such as human readability, access patterns, and whether it’s based on text or binary, which influences the size of its files.

| Format | Binary/Text | Human-readable | Example use cases |
|---|---|---|---|
| JSON | Text | Yes | Everywhere |
| CSV | Text | Yes | Everywhere |
| Parquet | Binary | No | Hadoop, Amazon Redshift |
| Avro | Primarily binary | No | Hadoop |
| Protobuf | Primarily binary | No | Google, TensorFlow |
| Pickle | Binary | No | Python, PyTorch |

JSON

JSON, JavaScript Object Notation, is everywhere. Even though it was derived from JavaScript, it’s language-independent: most modern programming languages can generate and parse JSON. It’s human-readable. Its key-value pair paradigm is simple but powerful, capable of handling data of different levels of structuredness. For example, your data can be stored in a structured format like the following:

    {
      "firstName": "Boatie",
      "lastName": "McBoatFace",
      "isVibing": true,
      "age": 12,
      "address": {
        "streetAddress": "12 Ocean Drive",
        "city": "Port Royal",
        "postalCode": "10021-3100"
      }
    }
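As a minimal sketch of serialization with this format, Python's standard `json` module can turn an in-memory dictionary into JSON text and reconstruct it later. The variable names here are illustrative only.

```python
import json

# An in-memory object mirroring the example record above.
person = {
    "firstName": "Boatie",
    "lastName": "McBoatFace",
    "isVibing": True,
    "age": 12,
    "address": {
        "streetAddress": "12 Ocean Drive",
        "city": "Port Royal",
        "postalCode": "10021-3100",
    },
}

# Serialize: Python object -> JSON text that can be stored or transmitted.
encoded = json.dumps(person, indent=2)

# Deserialize: JSON text -> Python object, reconstructed later.
decoded = json.loads(encoded)

print(decoded["address"]["city"])
```

The round trip returns an object equal to the original, which is the property a serialization format needs to provide.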

Row-Major vs. Column-Major Format

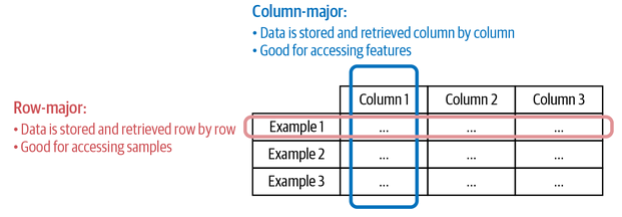

The two formats that are common and represent two distinct paradigms are CSV and Parquet. CSV (comma-separated values) is row-major, which means consecutive elements in a row are stored next to each other in memory. Parquet is column-major, which means consecutive elements in a column are stored next to each other.

Column-major formats allow flexible column-based reads, especially if your data is large with thousands, if not millions, of features. Row-major formats allow faster data writes. Overall, row-major formats are better when you have to do a lot of writes, whereas column-major ones are better when you have to do a lot of column-based reads.
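The trade-off can be sketched with plain Python containers standing in for the two layouts. This is only an illustration of the access patterns, not how CSV or Parquet are implemented; the ride records and field names are hypothetical.

```python
# Hypothetical ride records: (ride_id, city, price).
rows = [
    (1, "SF", 12.5),
    (2, "NYC", 30.0),
    (3, "SF", 7.5),
]

# Row-major layout: each record's fields are stored together.
row_major = list(rows)

# Column-major layout: each column's values are stored together.
column_major = {
    "ride_id": [r[0] for r in rows],
    "city": [r[1] for r in rows],
    "price": [r[2] for r in rows],
}

# Writing a new record: one append in row-major, one append per column otherwise.
new_ride = (4, "LA", 20.0)
row_major.append(new_ride)
for name, value in zip(column_major, new_ride):
    column_major[name].append(value)

# Reading one column: row-major touches every record, column-major reads one list.
prices_from_rows = [record[2] for record in row_major]
prices_from_columns = column_major["price"]

print(sum(prices_from_columns) / len(prices_from_columns))
```

With thousands of columns, the row-major read still has to walk every full record, while the column-major read touches only the one list it needs.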

Text vs. Binary Format

CSV and JSON are text files, whereas Parquet files are binary files. Text files are files that are in plain text, which usually means they are human-readable. Binary files are the catchall that refers to all nontext files. Binary files store data as raw bytes rather than as characters, and are meant to be read or used by programs that know how to interpret those bytes. Binary files are usually more compact. Here’s a simple example to show how binary files can save space compared to text files. Consider that you want to store the number 1000000. If you store it in a text file, it’ll require 7 characters, and if each character is 1 byte, it’ll require 7 bytes. If you store it in a binary file as int32, it’ll take only 32 bits or 4 bytes.
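The arithmetic above can be checked with a short sketch using Python's standard `struct` module. The choice of little-endian byte order is an assumption made for the example; the size is the same either way.

```python
import struct

number = 1000000

# Text encoding: one byte per digit character in ASCII/UTF-8.
as_text = str(number).encode("utf-8")

# Binary encoding: a fixed-width 32-bit signed integer ("<i" = little-endian int32).
as_int32 = struct.pack("<i", number)

print(len(as_text), len(as_int32))

# The binary form is not human-readable, but a program that knows the layout can decode it.
(decoded,) = struct.unpack("<i", as_int32)
```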

Data Models

Data models describe how data is represented. Consider cars in the real world. In a database, a car can be described using its make, its model, its year, its color, and its price. These attributes make up a data model for cars. Alternatively, you can also describe a car using its owner, its license plate, and its history of registered addresses. This is another data model for cars. How you choose to represent data not only affects the way your systems are built, but also the problems your systems can solve.

Relational Model

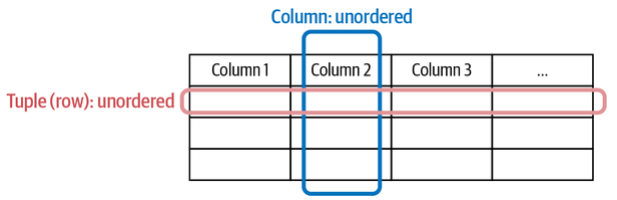

In this model, data is organized into relations; each relation is a set of tuples. A table is an accepted visual representation of a relation, and each row of a table makes up a tuple. Relations are unordered. You can shuffle the order of the rows or the order of the columns in a relation and it’s still the same relation. Data following the relational model is usually stored in file formats like CSV or Parquet. It’s often desirable for relations to be normalized. Data normalization can follow normal forms such as the first normal form (1NF), second normal form (2NF), etc.

One major downside of normalization is that your data is now spread across multiple relations. You can join the data from different relations back together, but joining can be expensive for large tables. Databases built around the relational data model are relational databases. Once you’ve put data in your databases, you’ll want a way to retrieve it. The language that you can use to specify the data that you want from a database is called a query language. The most popular query language for relational databases today is SQL.

The most important thing to note about SQL is that it’s a declarative language, as opposed to Python, which is an imperative language. In the imperative paradigm, you specify the steps needed for an action and the computer executes these steps to return the outputs. In the declarative paradigm, you specify the outputs you want, and the computer figures out the steps needed to get you the queried outputs.
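To make the contrast concrete, here is a small sketch that answers the same question twice: once with an explicit Python loop and once with a SQL query run through the standard `sqlite3` module. The `cars` table and its rows are hypothetical.

```python
import sqlite3

# Hypothetical table of cars: (make, model, year, price).
cars = [
    ("Toyota", "Corolla", 2015, 9000.0),
    ("Honda", "Civic", 2019, 15000.0),
    ("Toyota", "Camry", 2020, 21000.0),
]

# Imperative: spell out the steps (loop, filter, accumulate).
total, count = 0.0, 0
for make, model, year, price in cars:
    if make == "Toyota":
        total += price
        count += 1
imperative_avg = total / count

# Declarative: describe the result and let the database plan the steps.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE cars (make TEXT, model TEXT, year INTEGER, price REAL)")
conn.executemany("INSERT INTO cars VALUES (?, ?, ?, ?)", cars)
(declarative_avg,) = conn.execute(
    "SELECT AVG(price) FROM cars WHERE make = 'Toyota'"
).fetchone()
conn.close()

print(imperative_avg, declarative_avg)
```

The SQL version says nothing about how to scan or filter the rows; that is left to the database's query planner.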

NoSQL

The latest movement against the relational data model is NoSQL. Originally started as a hashtag for a meetup to discuss nonrelational databases, NoSQL has been retroactively reinterpreted as Not Only SQL, as many NoSQL data systems also support relational models. Two major types of nonrelational models are the document model and the graph model. The document model targets use cases where data comes in self-contained documents and relationships between one document and another are rare. The graph model goes in the opposite direction, targeting use cases where relationships between data items are common and important.

- Document Model - The document model is built around the concept of a “document.” A document is often a single continuous string, encoded as JSON, XML, or a binary format like BSON (Binary JSON). All documents in a document database are assumed to be encoded in the same format. Each document has a unique key that represents that document, which can be used to retrieve it. A collection of documents could be considered analogous to a table in a relational database, and a document analogous to a row. Because the document model doesn’t enforce a schema, it’s often referred to as schemaless. The document model has better locality than the relational model. However, it’s harder and less efficient to execute joins across documents than across tables.

-

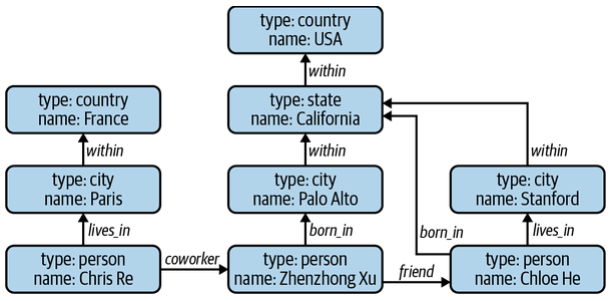

Graph Model - The graph model is built around the concept of a “graph.” A graph consists of nodes and edges, where the edges represent the relationships between the nodes. A database that uses graph structures to store its data is called a graph database. If in document databases, the content of each document is the priority, then in graph databases, the relationships between data items are the priority. Because the relationships are modeled explicitly in graph models, it’s faster to retrieve data based on relationships.
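The two models can be sketched with ordinary Python data structures. This is a toy illustration under assumed data, not how a document or graph database is implemented; the keys, fields, and the `reachable_within` helper are all hypothetical.

```python
from collections import deque

# Document model: each document is self-contained and retrieved by a unique key.
# Documents in the same collection need not share the same fields.
books = {
    "book-1": {"title": "Title A", "author": "Author A", "price": 20},
    "book-2": {"title": "Title B", "author": "Author B", "year": 2011},
}
document = books["book-1"]  # one lookup returns everything about the book

# Graph model: nodes plus edges that make relationships explicit.
# Hypothetical "follows" edges stored as an adjacency list.
follows = {
    "ana": ["bo", "cy"],
    "bo": ["dee"],
    "cy": ["dee"],
    "dee": [],
}

def reachable_within(graph, start, max_hops):
    """Return nodes reachable from `start` in at most `max_hops` edges (breadth-first)."""
    seen = {start}
    frontier = deque([(start, 0)])
    while frontier:
        node, hops = frontier.popleft()
        if hops == max_hops:
            continue
        for neighbor in graph[node]:
            if neighbor not in seen:
                seen.add(neighbor)
                frontier.append((neighbor, hops + 1))
    seen.discard(start)
    return seen

print(sorted(reachable_within(follows, "ana", 2)))
```

The document lookup shows locality: everything about one item comes back in a single read. The traversal shows why explicit edges help with relationship queries: following them needs no joins.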

Structured vs. Unstructured Data

Structured data follows a predefined data model, also known as a data schema. For example, the data model might specify that each data item consists of two values: the first value, “name,” is a string of at most 50 characters, and the second value, “age,” is an 8-bit integer in the range between 0 and 200. The predefined structure makes your data easier to analyze. If you want to know the average age of people in the database, all you have to do is to extract all the age values and average them out. The disadvantage of structured data is that you have to commit your data to a predefined schema. If your schema changes, you’ll have to retrospectively update all your data, often causing mysterious bugs in the process.
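As a rough sketch of what committing to a schema means, the example schema above can be written as a Python dataclass that rejects nonconforming records. The `Person` class and the sample records are assumptions made for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Person:
    """Schema from the text: name is at most 50 characters, age is 0..200."""
    name: str
    age: int

    def __post_init__(self):
        if not isinstance(self.name, str) or len(self.name) > 50:
            raise ValueError("name must be a string of at most 50 characters")
        if not isinstance(self.age, int) or not 0 <= self.age <= 200:
            raise ValueError("age must be an integer between 0 and 200")

people = [Person("Ana", 30), Person("Bo", 40)]

# With a known schema, analysis is a direct field access.
average_age = sum(p.age for p in people) / len(people)

# Data that violates the schema is rejected at construction time.
try:
    Person("Cy", 300)
    rejected = False
except ValueError:
    rejected = True

print(average_age, rejected)
```

If the schema later gains or changes a field, every stored record and every place that constructs a `Person` has to be revisited, which is the cost described above.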

Unstructured data doesn’t adhere to a predefined data schema. It’s usually text but can also be numbers, dates, images, audio, etc. For example, a text file of logs generated by your ML model is unstructured data. Even though unstructured data doesn’t adhere to a schema, it might still contain intrinsic patterns that help you extract structures. Unstructured data also allows for more flexible storage options. For example, if your storage follows a schema, you can only store data following that schema. But if your storage doesn’t follow a schema, you can store any type of data.

A repository for storing structured data is called a data warehouse. A repository for storing unstructured data is called a data lake. Data lakes are usually used to store raw data before processing. Data warehouses are used to store data that has been processed into formats ready to be used.

| Structured Data | Unstructured Data |
|---|---|
| Schema clearly defined | Data doesn’t have to follow a schema |
| Easy to search and analyze | Fast arrival |
| Can only handle data with a specific schema | Can handle data from any source |
| Schema changes will cause a lot of trouble | No need to worry about schema changes |
| Stored in data warehouses | Stored in data lakes |

Data Storage Engines and Processing

Data formats and data models specify the interface for how users can store and retrieve data. Storage engines, also known as databases, are the implementation of how data is stored and retrieved on machines.

Transactional and Analytical Processing

Many applications record data as transactions, for example each ride in a ride-sharing service. Transactions are inserted as they are generated, occasionally updated when something changes, and deleted when they are no longer needed. This type of processing is known as online transaction processing (OLTP). Because these transactions often involve users, they need to be processed fast (low latency) so that they don’t keep users waiting. The processing method also needs to have high availability; that is, the processing system needs to be available any time a user wants to make a transaction. Transactional databases are designed to process online transactions and satisfy the low latency, high availability requirements. They typically follow the ACID properties (atomicity, consistency, isolation, durability).

Because each transaction is often processed as a unit separately from other transactions, transactional databases are often row-major. This also means that transactional databases might not be efficient for questions such as “What’s the average price for all the rides in September in San Francisco?” This kind of analytical question requires aggregating data in columns across multiple rows of data. Analytical databases are designed for this purpose. They are efficient with queries that allow you to look at data from different viewpoints. We call this type of processing online analytical processing (OLAP).
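The two workloads can be sketched with Python's standard `sqlite3` module. SQLite serves both roles here purely for illustration; it is not an analytical database, and the `rides` table is hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE rides (id INTEGER PRIMARY KEY, city TEXT, month TEXT, price REAL NOT NULL)"
)

# Transactional workload: small writes, each applied atomically.
with conn:  # commits on success, rolls back if an exception escapes
    conn.execute("INSERT INTO rides (city, month, price) VALUES ('San Francisco', '09', 20.0)")
    conn.execute("INSERT INTO rides (city, month, price) VALUES ('San Francisco', '09', 30.0)")
    conn.execute("INSERT INTO rides (city, month, price) VALUES ('Oakland', '09', 10.0)")

# A failing transaction leaves no partial writes behind (atomicity).
try:
    with conn:
        conn.execute("INSERT INTO rides (city, month, price) VALUES ('San Francisco', '09', 99.0)")
        conn.execute("INSERT INTO rides (city, month, price) VALUES ('San Francisco', '09', NULL)")
except sqlite3.IntegrityError:
    pass  # the NOT NULL violation rolls back both inserts in this transaction

# Analytical workload: aggregate one column across many rows.
(avg_price,) = conn.execute(
    "SELECT AVG(price) FROM rides WHERE city = 'San Francisco' AND month = '09'"
).fetchone()
(row_count,) = conn.execute("SELECT COUNT(*) FROM rides").fetchone()
conn.close()

print(avg_price, row_count)
```

The inserts touch whole rows one at a time, while the aggregate reads a single column across all matching rows, which is why the two workloads favor different storage layouts.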

However, both the terms OLTP and OLAP have become outdated. First, the separation of transactional and analytical databases was due to limitations of technology: it was hard to have databases that could handle both transactional and analytical queries efficiently. That gap is closing. Today, we have transactional databases that can handle analytical queries. Second, in the traditional OLTP or OLAP paradigms, storage and processing are tightly coupled: how data is stored is also how data is processed. This may result in the same data being stored in multiple databases, with different processing engines used to solve different types of queries. Third, “online” has become an overloaded term that can mean many different things. Online used to just mean “connected to the internet.” Then, it grew to also mean “in production”: we say a feature is online after that feature has been deployed in production.

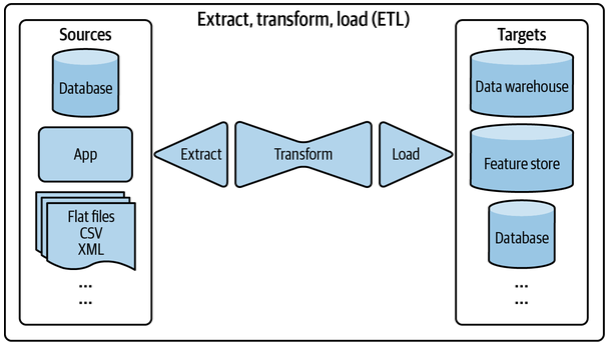

ETL: Extract, Transform, and Load

When data is extracted from different sources, it’s first transformed into the desired format before being loaded into the target destination such as a database or a data warehouse. This process is called ETL, which stands for extract, transform, and load. ETL refers to the general purpose processing and aggregating of data into the shape and the format that you want.

Extract is extracting the data you want from all your data sources. Some of that data will be corrupted or malformatted. In the extracting phase, you need to validate your data and reject the data that doesn’t meet your requirements. Transform is the meaty part of the process, where most of the data processing is done. You might want to join data from multiple sources and clean it. You might want to standardize the value ranges. Load is deciding how and how often to load your transformed data into the target destination, which can be a file, a database, or a data warehouse.
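The three phases can be sketched as three small functions. This is a minimal illustration under assumed inputs: the raw CSV text, the validation rule (price must parse as a number), and the `rides` target table are all hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical raw source; in practice this could be files, APIs, or other databases.
RAW_CSV = """ride_id,city,price
1,san francisco,12.50
2,New York,not_a_number
3,San Francisco ,7.50
"""

def extract(raw_text):
    """Parse rows and reject those that fail validation."""
    valid = []
    for row in csv.DictReader(io.StringIO(raw_text)):
        try:
            row["price"] = float(row["price"])
        except ValueError:
            continue  # malformatted price: reject the row
        valid.append(row)
    return valid

def transform(rows):
    """Clean and standardize values (trim whitespace, normalize city casing)."""
    return [
        (int(r["ride_id"]), r["city"].strip().title(), r["price"])
        for r in rows
    ]

def load(records, conn):
    """Write transformed records into the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS rides (ride_id INTEGER, city TEXT, price REAL)")
    with conn:
        conn.executemany("INSERT INTO rides VALUES (?, ?, ?)", records)

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute("SELECT ride_id, city, price FROM rides ORDER BY ride_id").fetchall()
print(loaded)
```

Keeping validation in `extract` and cleaning in `transform` mirrors the split described above, though real pipelines often blur that line.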

Modes of Dataflow

When data is passed from one process to another, we say that the data flows from one process to another, which gives us a dataflow. There are three main modes of dataflow:

- Data passing through databases

- Data passing through services using requests such as the requests provided by REST and RPC APIs (e.g., POST/GET requests)

- Data passing through a real-time transport like Apache Kafka and Amazon Kinesis

Data Passing through Databases

To pass data from process A to process B, process A can write that data into a database, and process B simply reads from that database. This mode, however, doesn’t always work, for two reasons. First, it requires both processes to be able to access the same database. This might be infeasible, especially if the two processes are run by two different companies. Second, it requires both processes to access data through a database, and reading from and writing to databases can be slow, making this mode unsuitable for applications with strict latency requirements (e.g., almost all consumer-facing applications).

Data Passing through Services

One way to pass data between two processes is to send data directly through a network that connects these two processes. To pass data from process B to process A, process A first sends a request to process B that specifies the data A needs, and B returns the requested data through the same network. Because processes communicate through requests, we say that this is request-driven. This mode of data passing is tightly coupled with the service-oriented architecture.

Two services in communication with each other can also be parts of the same application. Structuring different components of your application as separate services allows each component to be developed, tested, and maintained independently of one another. Structuring an application as separate services gives you a microservice architecture. The most popular styles of requests used for passing data through networks are REST (representational state transfer) and RPC (remote procedure call).

Data Passing through Real-Time Transport

Interservice data passing can blow up and become a bottleneck, slowing down the entire system. Request-driven data passing is synchronous: the target service has to listen to the request for the request to go through. A service that is down can cause all services that require data from it to be down.

What if there’s a broker that coordinates data passing among services? Instead of having services request data directly from each other and creating a web of complex interservice data passing, each service only has to communicate with the broker. Instead of using databases to broker data, we use in-memory storage to broker data. Real-time transports can be thought of as in-memory storage for data passing among services. A piece of data broadcast to a real-time transport is called an event. This architecture is, therefore, also called event-driven. A real-time transport is sometimes called an event bus.

Request-driven architecture works well for systems that rely more on logic than on data. Event-driven architecture works better for systems that are data-heavy.

The two most common types of real-time transports are pubsub, which is short for publish-subscribe, and message queue. In the pubsub model, any service can publish to different topics in a real-time transport, and any service that subscribes to a topic can read all the events in that topic. In a message queue model, an event often has intended consumers (an event with intended consumers is called a message), and the message queue is responsible for getting the message to the right consumers.

Examples of pubsub solutions are Apache Kafka and Amazon Kinesis. Examples of message queues are Apache RocketMQ and RabbitMQ.
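The difference between the two models can be sketched with toy in-memory classes. These are hypothetical stand-ins, not the APIs of Kafka, Kinesis, RocketMQ, or RabbitMQ, and the queue here simply hands each message to whichever consumer asks first, which is only one possible delivery policy. Handlers are also called synchronously, whereas a real transport decouples publishers from subscribers.

```python
from collections import defaultdict, deque

class PubSub:
    """Toy in-memory pubsub: every subscriber of a topic sees every event."""
    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, event):
        for handler in self._subscribers[topic]:
            handler(event)

class MessageQueue:
    """Toy in-memory queue: each message is delivered to exactly one consumer."""
    def __init__(self):
        self._messages = deque()

    def send(self, message):
        self._messages.append(message)

    def receive(self):
        return self._messages.popleft() if self._messages else None

# Pubsub: both services receive the same event.
pricing_seen, fraud_seen = [], []
bus = PubSub()
bus.subscribe("ride_requested", pricing_seen.append)
bus.subscribe("ride_requested", fraud_seen.append)
bus.publish("ride_requested", {"ride_id": 1})

# Message queue: two workers pull from one queue, each message goes to one of them.
queue = MessageQueue()
queue.send({"ride_id": 1})
queue.send({"ride_id": 2})
worker_a = queue.receive()
worker_b = queue.receive()

print(pricing_seen, fraud_seen, worker_a, worker_b)
```

In both cases the producing service talks only to the broker and never needs to know which services consume its data.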

Batch Processing vs. Stream Processing

Once your data arrives in data storage engines like databases, data lakes, or data warehouses, it becomes historical data. This is opposed to streaming data (data that is still streaming in). Historical data is often processed in batch jobs—jobs that are kicked off periodically. When data is processed in batch jobs, we refer to it as batch processing.

When you have data in real-time transports like Apache Kafka and Amazon Kinesis, we say that you have streaming data. Stream processing refers to doing computation on streaming data. Computation on streaming data can also be kicked off periodically, but the periods are usually much shorter than the periods for batch jobs (e.g., every five minutes instead of every day). Computation on streaming data can also be kicked off whenever the need arises.

Stream processing, when done right, can give low latency because you can process data as soon as data is generated, without having to first write it into databases. With stream processing, it’s possible to compute on only the new data each day and join that result with the earlier computation, preventing redundancy.
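One way to picture combining new data with an earlier computation is an incrementally updated mean. The sketch below compares it with a batch recomputation over all stored data; the `RunningMean` class and the price values are hypothetical.

```python
class RunningMean:
    """Streaming computation: updated per event without re-reading history."""
    def __init__(self):
        self.count = 0
        self.mean = 0.0

    def update(self, value):
        self.count += 1
        self.mean += (value - self.mean) / self.count
        return self.mean

def batch_mean(values):
    """Batch computation: recomputed from all stored historical data."""
    return sum(values) / len(values)

# Hypothetical ride prices arriving over time.
historical = [10.0, 20.0, 30.0]
incoming = [40.0, 60.0]

stream_feature = RunningMean()
for price in historical + incoming:
    stream_feature.update(price)

batch_feature = batch_mean(historical + incoming)
print(stream_feature.mean, batch_feature)
```

Both approaches arrive at the same value, but the streaming version keeps only a count and a mean as state, so each new event costs constant work instead of a pass over the full history.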

Because batch processing happens much less frequently than stream processing, in ML, batch processing is usually used to compute features that change less often. Batch features—features extracted through batch processing—are also known as static features. Stream processing is used to compute features that change quickly. Streaming features—features extracted through stream processing—are also known as dynamic features.